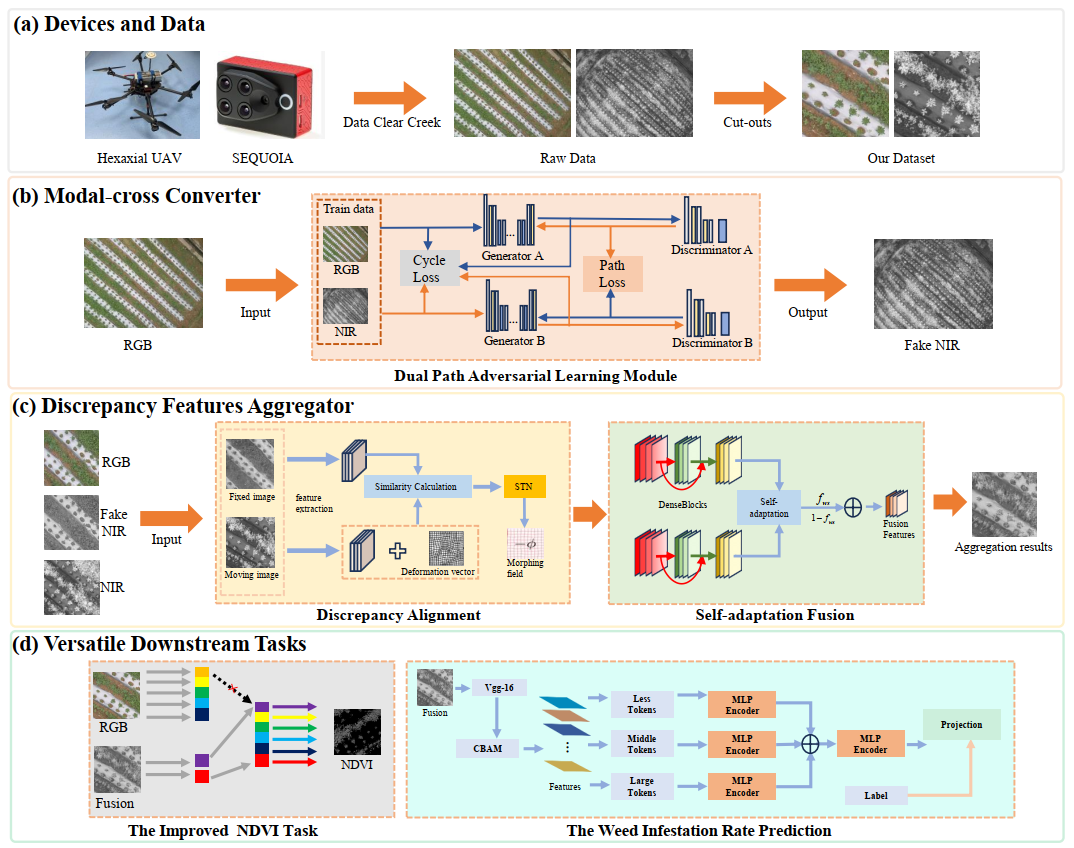

MWPS: a multimodal weed infestation rate prediction system supported by cross-fusion of RGB and infrared images. We used a UAV and a multispectral camera to collect weed data from a seedling-stage chili pepper field, and achieved rapid prediction of weed infestation rates by fusing visible and infrared images. Two main problems currently limit the use of multimodal data in weed control. First, differences between modalities remain difficult to resolve. Second, because remote-sensing field data are scarce, existing weed identification methods generalize poorly. To address these problems, we collected an early pepper weeds multimodal database (PWMD) containing 1495 pairs of visible and infrared images. We also designed a multimodal weed infestation rate prediction system (MWPS), which eliminates tedious manual preprocessing and outputs a weed infestation rate that makes it easier to assess the current level of weed damage in the field. MWPS consists of an image conversion module, an unsupervised two-path adaptive fusion module, and a weed infestation rate prediction module. The image conversion and image fusion modules mitigate modal differences and produce high-quality fusion results. The prediction module is easy to train and predicts the weed infestation rate quickly. In validation, our method obtains fused images with a structural similarity (SSIM) of 0.82 and a mutual information of 2.37, both better than other unsupervised multimodal fusion methods. The prediction module does not use a density map as a supervisory signal; its average prediction time is 0.37 milliseconds, and its average test error is as low as 0.12.

Figure 1: Framework of the multimodal weed infestation rate prediction system. (a) Our data collection equipment and the process of collecting and organizing the data. (b) A schematic of our McC approach, which converts visible images into the infrared modality using generative adversarial learning. (c) Our multi-scale multimodal discrepancy features aggregator (DFA), designed to avoid fusion artifacts and information redundancy. (d) A specific application scenario for the processed multimodal data: we performed vegetation segmentation and optimized the conventional counting module to predict the weed infestation rate in the field (WIRP).
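
The mutual information score reported for the fused images can be illustrated with a minimal sketch. It assumes a histogram-based estimate on 8-bit grayscale images, measured in bits, and assumes the common fusion convention of summing the mutual information between the fused image and each source image. The function names `mutual_information` and `fusion_mi` are hypothetical, and the exact evaluation protocol behind the reported value may differ.

```python
import numpy as np


def mutual_information(a, b, bins=256):
    """Histogram-based mutual information (in bits) between two images.

    Assumes both inputs are equally sized arrays with values in [0, 255].
    """
    a = np.asarray(a).ravel()
    b = np.asarray(b).ravel()
    # Joint histogram of co-occurring intensity values.
    joint, _, _ = np.histogram2d(a, b, bins=bins, range=[[0, 256], [0, 256]])
    p_ab = joint / joint.sum()
    # Marginal distributions, kept 2-D so their product is an outer product.
    p_a = p_ab.sum(axis=1, keepdims=True)
    p_b = p_ab.sum(axis=0, keepdims=True)
    nz = p_ab > 0  # skip empty cells to avoid log(0)
    return float(np.sum(p_ab[nz] * np.log2(p_ab[nz] / (p_a @ p_b)[nz])))


def fusion_mi(fused, visible, infrared, bins=256):
    """Assumed fusion score: MI(fused, visible) + MI(fused, infrared)."""
    return (mutual_information(fused, visible, bins)
            + mutual_information(fused, infrared, bins))
```

Under this estimate, a fused image that shares more intensity structure with both source images scores higher, while a fused image that is statistically independent of a source contributes nothing for that source.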